- #AIRFLOW 2.0 GITHUB INSTALL#

- #AIRFLOW 2.0 GITHUB UPDATE#

- #AIRFLOW 2.0 GITHUB UPGRADE#

- #AIRFLOW 2.0 GITHUB FULL#

- #AIRFLOW 2.0 GITHUB SOFTWARE#

Proven core functionality for data pipelining.

#AIRFLOW 2.0 GITHUB SOFTWARE#

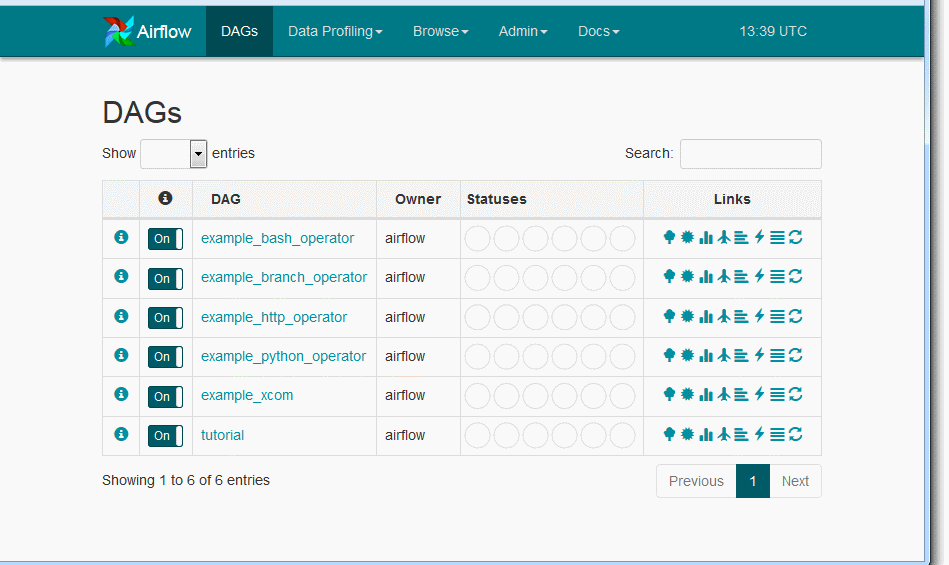

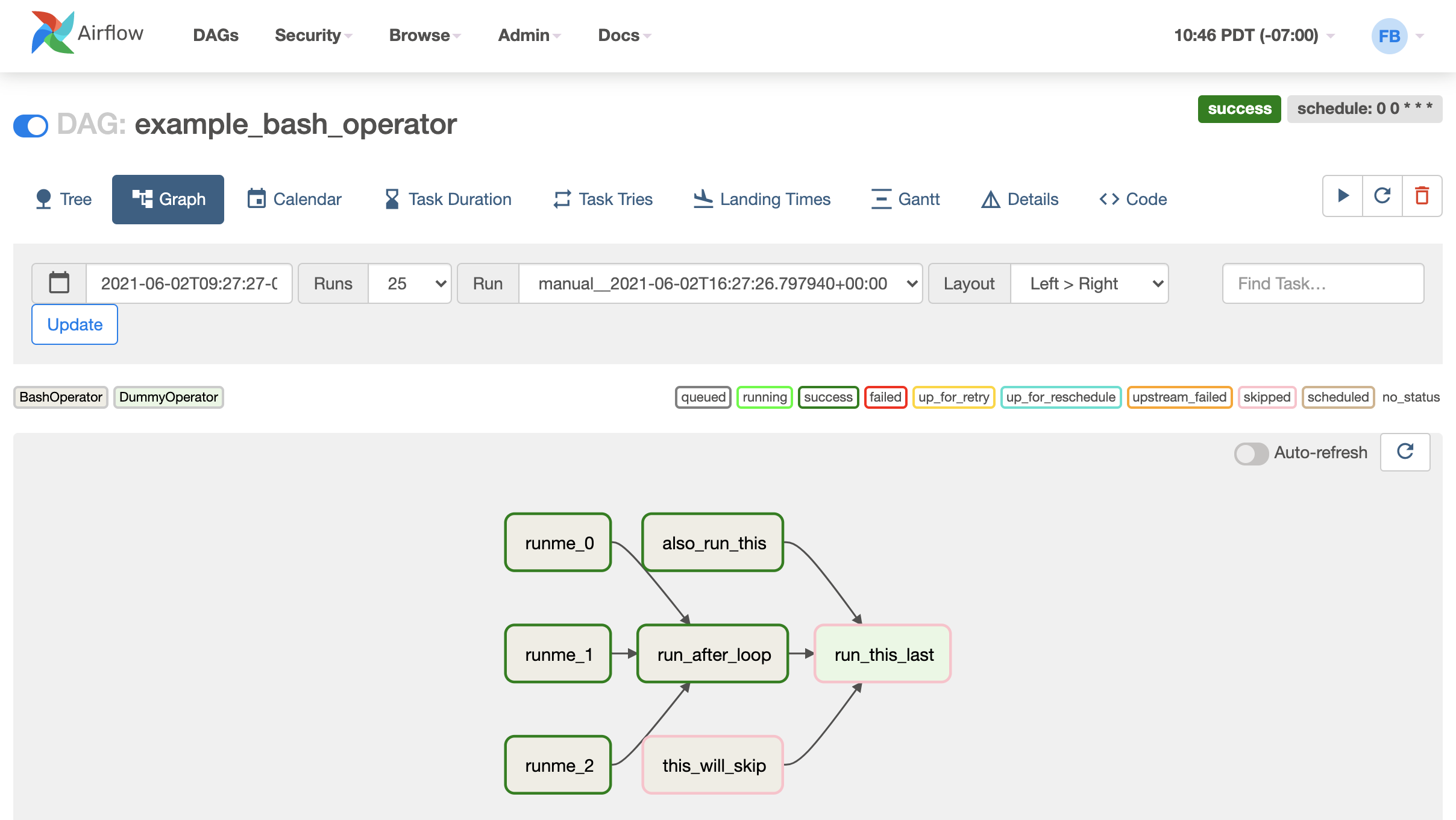

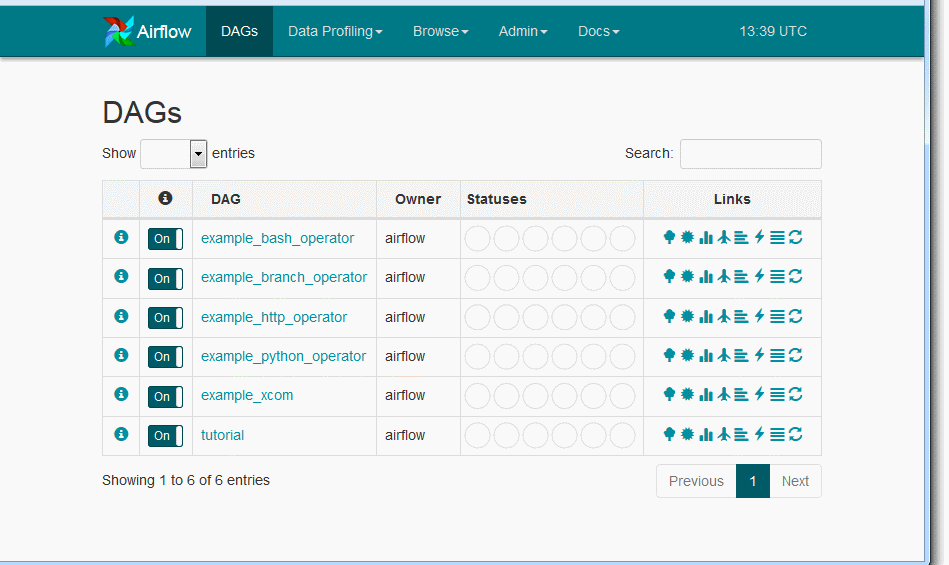

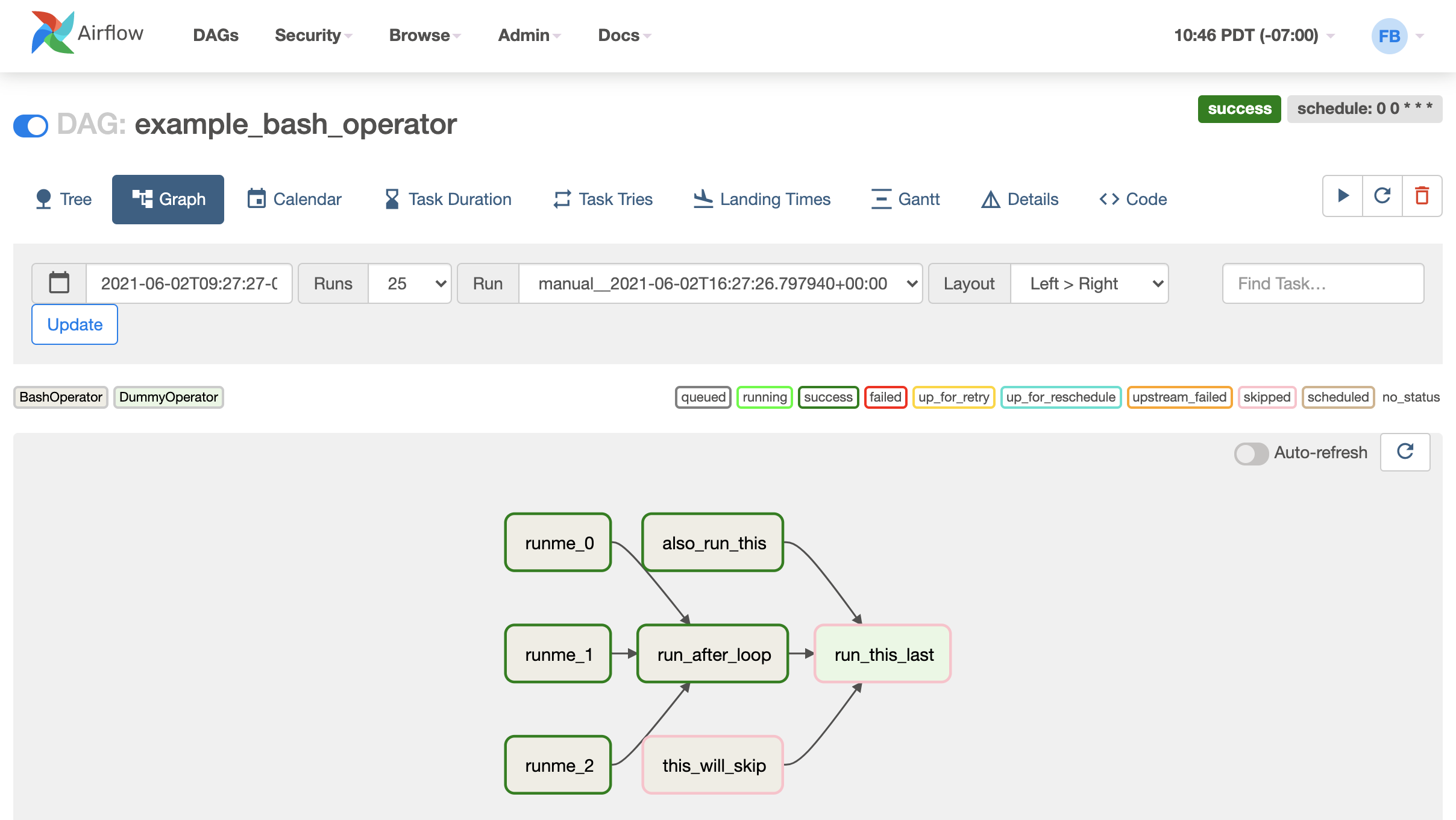

Note: This article focuses mainly on Airflow 2.0. If you’d like to learn more about the latest features, head over to the articles about Airflow 2.2 and Airflow 2.3. If your team is running Airflow 1 and would like help establishing a migration path, reach out to us.

Apache Airflow was created by Airbnb’s Maxime Beauchemin as an open-source project in late 2014. It was brought into the Apache Software Foundation’s Incubator Program in March 2016 and saw growing success in the wake of Maxime’s well-known blog post, “The Rise of the Data Engineer.” In January 2019, the Foundation announced Airflow as a Top-Level Apache Project, and it is now widely recognized as the industry’s leading workflow orchestration solution. Since then, Airflow’s strength as a tool for dataflow automation has continued to grow.

#AIRFLOW 2.0 GITHUB UPGRADE#

Note: With the release of Airflow 2.0 in late 2020, and with subsequent releases, the open-source project addressed a significant number of pain points commonly reported by users running previous versions. We strongly encourage your team to upgrade to Airflow 2.x.

#AIRFLOW 2.0 GITHUB INSTALL#

Install pre-commit hooks for linting, format checking, etc.: install the `pre-commit` library with `pip install pre-commit`, then run `pre-commit install` in the root of the repo.

- Run Airflow locally, including the Airflow UI: `docker-compose up`.
- Default authentication values for the Airflow UI are provided in lines 96 and 97 of `docker-compose.yaml`.
- Uncomment line 11 in `docker-compose.yaml`.
- Set the `PROJECT_ID` environment variable to your test project.
- Authorize gcloud to access the Cloud Platform with Google user credentials: `helpers/scripts/gcp-auth.sh`.
- Run the unit tests with a coverage report: `tests/airflow "pytest --cov=gcp_airflow_foundations tests/unit"`.
- Rebuild the Docker image if the requirements changed: `docker-compose build`.
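Before `docker-compose up`, it can help to check the prerequisites these steps depend on. The sketch below is a hypothetical helper, not part of the repository: `preflight_problems`, `KEY_FILE`, and the messages are made up for illustration. It only assumes what the setup text states, namely that the service-account key lives at `helpers/key/keys.json` and that `PROJECT_ID` must be set.

```python
import json
import os
from pathlib import Path
from typing import List, Mapping, Optional

# Location of the service-account key, relative to the repo root, as given in the setup steps.
KEY_FILE = Path("helpers") / "key" / "keys.json"


def preflight_problems(repo_root: Path, env: Optional[Mapping[str, str]] = None) -> List[str]:
    """List reasons a local run is likely to fail; an empty list means the basic checks passed."""
    env = os.environ if env is None else env
    problems: List[str] = []

    key_path = repo_root / KEY_FILE
    if not key_path.is_file():
        problems.append(f"service-account key not found at {key_path}")
    else:
        try:
            # Service-account keys downloaded from GCP are JSON documents.
            json.loads(key_path.read_text(encoding="utf-8"))
        except json.JSONDecodeError:
            problems.append(f"{key_path} is not valid JSON")

    # PROJECT_ID should point at the test project.
    if not env.get("PROJECT_ID"):
        problems.append("environment variable PROJECT_ID is not set")

    return problems
```

Calling `preflight_problems(Path.cwd())` from the repository root and printing any returned messages is enough for a quick check; passing `env` explicitly keeps the function easy to test without touching the real environment.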

Before running Airflow locally, create a service account in GCP and save its key as `helpers/key/keys.json` (don't worry: the file is listed in `.gitignore` and will not be pushed to the git repo).

#AIRFLOW 2.0 GITHUB UPDATE#

See the gcp-airflow-foundations documentation for more details.

Sample DAGs that ingest publicly available GCS files can be found in the `dags` folder; they start as soon as Airflow runs locally. For them to run successfully, make sure to:

- Enable the BigQuery, Cloud Storage, Cloud DLP, and Data Catalog APIs.
- Create a BigQuery dataset for the HDS and ODS.
- Update the `gcp_project`, `location`, and dataset values, as well as the DLP and policy tag configs, with your newly created values.
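As the names suggest, an ODS (Operational Data Store) holds the current state of each record, while an HDS (Historical Data Store) also keeps earlier versions. The in-memory sketch below is only a conceptual illustration under that assumption: the framework builds these tables in BigQuery, and `Stores`, `ingest`, and the `_ingested_at` column are hypothetical names. It also shows one simple way to keep a re-run of the same batch idempotent.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set, Tuple

Record = Dict[str, Any]


@dataclass
class Stores:
    """Hypothetical in-memory stand-ins for an ODS table and an HDS table."""

    ods: Dict[Any, Record] = field(default_factory=dict)  # latest row per primary key
    hds: List[Record] = field(default_factory=list)       # every version, tagged with its batch
    _seen: Set[Tuple[Any, str]] = field(default_factory=set, repr=False)

    def ingest(self, batch: List[Record], key: str, ingested_at: str) -> None:
        # Within one batch, keep only the last row per key.
        latest = {row[key]: row for row in batch}
        for pk, row in latest.items():
            # ODS: upsert, so it always reflects the current state of each key.
            self.ods[pk] = dict(row)
            # HDS: append-only history; skip rows already recorded for this batch
            # so that re-running the same batch does not create duplicates.
            if (pk, ingested_at) not in self._seen:
                self._seen.add((pk, ingested_at))
                self.hds.append({**row, "_ingested_at": ingested_at})


if __name__ == "__main__":
    stores = Stores()
    stores.ingest([{"id": 1, "status": "new"}], key="id", ingested_at="2024-01-01")
    stores.ingest([{"id": 1, "status": "shipped"}], key="id", ingested_at="2024-01-02")
    stores.ingest([{"id": 1, "status": "shipped"}], key="id", ingested_at="2024-01-02")  # re-run
    print(stores.ods)       # current state only
    print(len(stores.hds))  # 2: the re-run added nothing
```

In a warehouse the same split typically maps to a MERGE into the ODS table and an insert into a partitioned HDS table; the sketch only models the intended end state.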

#AIRFLOW 2.0 GITHUB FULL#

Install the package from PyPI: `pip install 'gcp-airflow-foundations'`
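A quick way to confirm that the install worked is to ask the standard library's `importlib.metadata` (Python 3.8+) for the installed distribution's version. This is a generic Python check, not something the package itself provides; `installed_version` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str = "gcp-airflow-foundations") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is not installed."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_version()
    print(f"gcp-airflow-foundations {found}" if found else "gcp-airflow-foundations is not installed")
```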

Airflow is a powerful open-source orchestration framework and the go-to choice for building data ingestion pipelines on GCP (using Composer, a hosted Airflow service). However, most companies using it face the same set of problems:

- Learning curve: Airflow requires Python knowledge and has some gotchas that take time to learn. Further, writing Python DAGs for every single table that needs to be ingested becomes cumbersome. Most companies end up building utilities that create DAGs from configuration files, both to simplify DAG creation and to let non-developers configure ingestion.
- Data lake and data pipeline design best practices: Airflow only provides the building blocks; users still need to understand and implement the nuances of building proper ingestion pipelines for the data lake or data warehouse platform they are using.
- Core reusability and best-practice enforcement across the enterprise: usually each team maintains its own Airflow source code and deployment.

We have written an opinionated yet flexible framework for building ingestion pipelines into a BigQuery data warehouse. It supports the following features:

- Zero-code, config-file-based ingestion: anybody can start ingesting from the growing number of sources just by providing a simple configuration file. No Python or Airflow knowledge is required.
- Modular and extendable: the core of the framework is a lightweight library, and a new source can be added by extending the provided base classes.
- Opinionated automatic creation of the ODS (Operational Data Store) and HDS (Historical Data Store) in BigQuery, enforcing best practices such as schema migration, data quality validation, idempotency, and partitioning.
- Dataflow job support for ingesting large datasets from SQL sources and deploying jobs into a specific network or shared VPC.
- Support for advanced Airflow features for job prioritization, such as slots and priorities.
- Integration with GCP data services such as DLP and Data Catalog.
- Well tested: we maintain a rich suite of both unit and integration tests.
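Config-driven ingestion with pluggable source classes can be illustrated with a minimal sketch. It assumes nothing about the framework's real API: `DataSource`, `register`, `GcsSource`, `build_plan`, `SOURCE_TYPES`, and the config keys (`source`, `type`, `bucket`, `tables`) are all hypothetical. A real framework would read the config from a file and emit Airflow tasks rather than plain strings.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, List, Type

# Hypothetical registry: maps the "type" string in a config to a source class.
SOURCE_TYPES: Dict[str, Type["DataSource"]] = {}


def register(type_name: str) -> Callable[[Type["DataSource"]], Type["DataSource"]]:
    """Class decorator that makes a DataSource subclass selectable from config."""
    def wrap(cls: Type["DataSource"]) -> Type["DataSource"]:
        SOURCE_TYPES[type_name] = cls
        return cls
    return wrap


class DataSource(ABC):
    """Hypothetical base class: subclasses only describe how to extract one table."""

    def __init__(self, config: Dict[str, Any]) -> None:
        self.config = config

    @abstractmethod
    def extract_step(self, table: str) -> str:
        """Return a description of the source-specific extraction step."""

    def plan(self) -> List[str]:
        # Shared steps every source inherits: extract, then load the ODS and HDS.
        steps: List[str] = []
        for table in self.config["tables"]:
            steps.append(self.extract_step(table))
            steps.append(f"merge {table} into ODS")
            steps.append(f"append {table} to HDS")
        return steps


@register("gcs")
class GcsSource(DataSource):
    """Adding a source means subclassing the base class and registering a type name."""

    def extract_step(self, table: str) -> str:
        return f"extract {table} from gs://{self.config['bucket']}/{table}"


def build_plan(config: Dict[str, Any]) -> List[str]:
    """Turn a parsed config into an ordered list of steps, with no per-table Python code."""
    source_cls = SOURCE_TYPES[config["source"]["type"]]
    return source_cls(config["source"]).plan()


if __name__ == "__main__":
    # In practice this dict would be parsed from a configuration file.
    example_config = {"source": {"type": "gcs", "bucket": "my-bucket", "tables": ["orders", "customers"]}}
    for step in build_plan(example_config):
        print(step)
```

The design keeps the shared ODS/HDS steps in the base class, so a new source only supplies its own extraction logic and a registered type name; the config, not new DAG code, decides which source runs and for which tables.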
